2024

Dral, Pavlo O.; Ge, Fuchun; Hou, Yi-Fan; Zheng, Peikun; Chen, Yuxinxin; Barbatti, Mario; Isayev, Olexandr; Wang, Cheng; Xue, Bao-Xin; Pinheiro Jr, Max; Su, Yuming; Dai, Yiheng; Chen, Yangtao; Zhang, Lina; Zhang, Shuang; Ullah, Arif; Zhang, Quanhao; Ou, Yanchi

MLatom 3: A Platform for Machine Learning-Enhanced Computational Chemistry Simulations and Workflows Journal Article

In: J. Chem. Theory Comput., vol. 20, no. 3, pp. 1193–1213, 2024.

Tags: ANI, Machine learning potential

@article{Dral2024,

title = {MLatom 3: A Platform for Machine Learning-Enhanced Computational Chemistry Simulations and Workflows},

author = {Pavlo O. Dral and Fuchun Ge and Yi-Fan Hou and Peikun Zheng and Yuxinxin Chen and Mario Barbatti and Olexandr Isayev and Cheng Wang and Bao-Xin Xue and Pinheiro, Jr., Max and Yuming Su and Yiheng Dai and Yangtao Chen and Lina Zhang and Shuang Zhang and Arif Ullah and Quanhao Zhang and Yanchi Ou},

doi = {10.1021/acs.jctc.3c01203},

year = {2024},

date = {2024-02-13},

urldate = {2024-02-13},

journal = {J. Chem. Theory Comput.},

volume = {20},

number = {3},

pages = {1193--1213},

publisher = {American Chemical Society (ACS)},

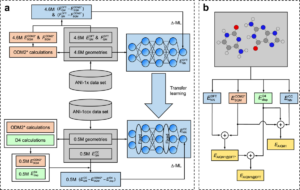

abstract = {Machine learning (ML) is increasingly becoming a common tool in computational chemistry. At the same time, the rapid development of ML methods requires a flexible software framework for designing custom workflows. MLatom 3 is a program package designed to leverage the power of ML to enhance typical computational chemistry simulations and to create complex workflows. This open-source package provides plenty of choice to the users who can run simulations with the command-line options, input files, or with scripts using MLatom as a Python package, both on their computers and on the online XACS cloud computing service at XACScloud.com. Computational chemists can calculate energies and thermochemical properties, optimize geometries, run molecular and quantum dynamics, and simulate (ro)vibrational, one-photon UV/vis absorption, and two-photon absorption spectra with ML, quantum mechanical, and combined models. The users can choose from an extensive library of methods containing pretrained ML models and quantum mechanical approximations such as AIQM1 approaching coupled-cluster accuracy. The developers can build their own models using various ML algorithms. The great flexibility of MLatom is largely due to the extensive use of the interfaces to many state-of-the-art software packages and libraries.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

2023

Zhao, Qiyuan; Anstine, Dylan M.; Isayev, Olexandr; Savoie, Brett M.

Δ² machine learning for reaction property prediction Journal Article

In: Chem. Sci., vol. 14, no. 46, pp. 13392–13401, 2023.

Tags: AIMNet, Machine learning potential, Organic reactions

@article{Zhao2023b,

title = {$\Delta^{2}$ machine learning for reaction property prediction},

author = {Qiyuan Zhao and Dylan M. Anstine and Olexandr Isayev and Brett M. Savoie},

doi = {10.1039/d3sc02408c},

year = {2023},

date = {2023-11-29},

urldate = {2023-11-29},

journal = {Chem. Sci.},

volume = {14},

number = {46},

pages = {13392--13401},

publisher = {Royal Society of Chemistry (RSC)},

abstract = {The emergence of Δ-learning models, whereby machine learning (ML) is used to predict a correction to a low-level energy calculation, provides a versatile route to accelerate high-level energy evaluations at a given geometry. However, Δ-learning models are inapplicable to reaction properties like heats of reaction and activation energies that require both a high-level geometry and energy evaluation. Here, a Δ²-learning model is introduced that can predict high-level activation energies based on low-level critical-point geometries. The Δ² model uses an atom-wise featurization typical of contemporary ML interatomic potentials (MLIPs) and is trained on a dataset of ∼167 000 reactions, using the GFN2-xTB energy and critical-point geometry as a low-level input and the B3LYP-D3/TZVP energy calculated at the B3LYP-D3/TZVP critical point as a high-level target. The excellent performance of the Δ² model on unseen reactions demonstrates the surprising ease with which the model implicitly learns the geometric deviations between the low-level and high-level geometries that condition the activation energy prediction. The transferability of the Δ² model is validated on several external testing sets where it shows near chemical accuracy, illustrating the benefits of combining ML models with readily available physical-based information from semi-empirical quantum chemistry calculations. Fine-tuning of the Δ² model on a small number of Gaussian-4 calculations produced a 35% accuracy improvement over DFT activation energy predictions while retaining xTB-level cost. The Δ² model approach proves to be an efficient strategy for accelerating chemical reaction characterization with minimal sacrifice in prediction accuracy.},

keywords = {AIMNet, Machine learning potential, Organic reactions},

pubstate = {published},

tppubtype = {article}

}

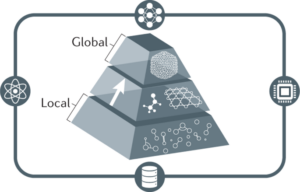

Fedik, Nikita; Nebgen, Benjamin; Lubbers, Nicholas; Barros, Kipton; Kulichenko, Maksim; Li, Ying Wai; Zubatyuk, Roman; Messerly, Richard; Isayev, Olexandr; Tretiak, Sergei

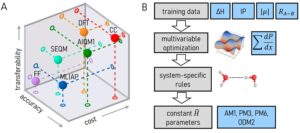

Synergy of semiempirical models and machine learning in computational chemistry Journal Article

In: J. Chem. Phys., vol. 159, no. 11, pp. 110901, 2023.

Tags: Machine learning potential, Review

@article{Fedik2023,

title = {Synergy of semiempirical models and machine learning in computational chemistry},

author = {Nikita Fedik and Benjamin Nebgen and Nicholas Lubbers and Kipton Barros and Maksim Kulichenko and Ying Wai Li and Roman Zubatyuk and Richard Messerly and Olexandr Isayev and Sergei Tretiak},

doi = {10.1063/5.0151833},

year = {2023},

date = {2023-09-21},

urldate = {2023-09-21},

journal = {J. Chem. Phys.},

volume = {159},

number = {11},

pages = {110901},

publisher = {AIP Publishing},

abstract = {Catalyzed by enormous success in the industrial sector, many research programs have been exploring data-driven, machine learning approaches. Performance can be poor when the model is extrapolated to new regions of chemical space, e.g., new bonding types, new many-body interactions. Another important limitation is the spatial locality assumption in model architecture, and this limitation cannot be overcome with larger or more diverse datasets. The outlined challenges are primarily associated with the lack of electronic structure information in surrogate models such as interatomic potentials. Given the fast development of machine learning and computational chemistry methods, we expect some limitations of surrogate models to be addressed in the near future; nevertheless spatial locality assumption will likely remain a limiting factor for their transferability. Here, we suggest focusing on an equally important effort\textemdashdesign of physics-informed models that leverage the domain knowledge and employ machine learning only as a corrective tool. In the context of material science, we will focus on semi-empirical quantum mechanics, using machine learning to predict corrections to the reduced-order Hamiltonian model parameters. The resulting models are broadly applicable, retain the speed of semiempirical chemistry, and frequently achieve accuracy on par with much more expensive ab initio calculations. These early results indicate that future work, in which machine learning and quantum chemistry methods are developed jointly, may provide the best of all worlds for chemistry applications that demand both high accuracy and high numerical efficiency.},

keywords = {Machine learning potential, Review},

pubstate = {published},

tppubtype = {article}

}

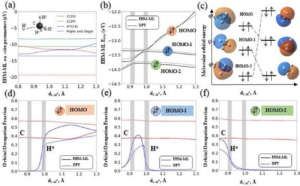

Moayedpour, Saeed; Bier, Imanuel; Wen, Wen; Dardzinski, Derek; Isayev, Olexandr; Marom, Noa

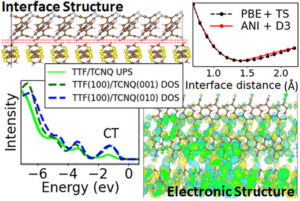

Structure Prediction of Epitaxial Organic Interfaces with Ogre, Demonstrated for Tetracyanoquinodimethane (TCNQ) on Tetrathiafulvalene (TTF) Journal Article

In: J. Phys. Chem. C, vol. 127, no. 21, pp. 10398–10410, 2023.

Tags: Crystal structure, Machine learning potential

@article{Moayedpour2023,

title = {Structure Prediction of Epitaxial Organic Interfaces with Ogre, Demonstrated for Tetracyanoquinodimethane (TCNQ) on Tetrathiafulvalene (TTF)},

author = {Saeed Moayedpour and Imanuel Bier and Wen Wen and Derek Dardzinski and Olexandr Isayev and Noa Marom},

doi = {10.1021/acs.jpcc.3c02384},

year = {2023},

date = {2023-06-01},

urldate = {2023-06-01},

journal = {J. Phys. Chem. C},

volume = {127},

number = {21},

pages = {10398--10410},

publisher = {American Chemical Society (ACS)},

abstract = {Highly ordered epitaxial interfaces between organic semiconductors are considered as a promising avenue for enhancing the performance of organic electronic devices including solar cells and transistors, thanks to their well-controlled, uniform electronic properties and high carrier mobilities. The electronic structure of epitaxial organic interfaces and their functionality in devices are inextricably linked to their structure. We present a method for structure prediction of epitaxial organic interfaces based on lattice matching followed by surface matching, implemented in the open-source Python package, Ogre. The lattice matching step produces domain-matched interfaces, where commensurability is achieved with different integer multiples of the substrate and film unit cells. In the surface matching step, Bayesian optimization (BO) is used to find the interfacial distance and registry between the substrate and film. The BO objective function is based on dispersion corrected deep neural network interatomic potentials. These are shown to be in qualitative agreement with density functional theory (DFT) regarding the optimal position of the film on top of the substrate and the ranking of putative interface structures. Ogre is used to investigate the epitaxial interface of 7,7,8,8-tetracyanoquinodimethane (TCNQ) on tetrathiafulvalene (TTF), whose electronic structure has been probed by ultraviolet photoemission spectroscopy (UPS), but whose structure had been hitherto unknown [Organic Electronics 2017, 48, 371]. We find that TCNQ(001) on top of TTF(100) is the most stable interface configuration, closely followed by TCNQ(010) on top of TTF(100). The density of states, calculated using DFT, is in excellent agreement with UPS, including the presence of an interface charge transfer state.},

keywords = {Crystal structure, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Jaffrelot Inizan, Théo; Plé, Thomas; Adjoua, Olivier; Ren, Pengyu; Gökcan, Hatice; Isayev, Olexandr; Lagardère, Louis; Piquemal, Jean-Philip

Scalable hybrid deep neural networks/polarizable potentials biomolecular simulations including long-range effects Journal Article

In: Chem. Sci., vol. 14, no. 20, pp. 5438–5452, 2023.

Tags: ANI, Machine learning potential

@article{JaffrelotInizan2023,

title = {Scalable hybrid deep neural networks/polarizable potentials biomolecular simulations including long-range effects},

author = {Jaffrelot Inizan, Th\'{e}o and Thomas Pl\'{e} and Olivier Adjoua and Pengyu Ren and Hatice G\"{o}kcan and Olexandr Isayev and Louis Lagard\`{e}re and Jean-Philip Piquemal},

doi = {10.1039/d2sc04815a},

year = {2023},

date = {2023-05-24},

urldate = {2023-05-24},

journal = {Chem. Sci.},

volume = {14},

number = {20},

pages = {5438--5452},

publisher = {Royal Society of Chemistry (RSC)},

abstract = {Deep-HP is a scalable extension of the Tinker-HP multi-GPU molecular dynamics (MD) package enabling the use of Pytorch/TensorFlow Deep Neural Network (DNN) models.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

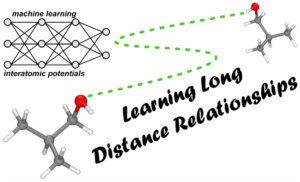

Anstine, Dylan M.; Isayev, Olexandr

Machine Learning Interatomic Potentials and Long-Range Physics Journal Article

In: J. Phys. Chem. A, vol. 127, no. 11, pp. 2417–2431, 2023, ISSN: 1520-5215.

Tags: AIMNet, ANI, Machine learning potential, Review

@article{Anstine2023,

title = {Machine Learning Interatomic Potentials and Long-Range Physics},

author = {Dylan M. Anstine and Olexandr Isayev},

doi = {10.1021/acs.jpca.2c06778},

issn = {1520-5215},

year = {2023},

date = {2023-03-23},

urldate = {2023-03-23},

journal = {J. Phys. Chem. A},

volume = {127},

number = {11},

pages = {2417--2431},

publisher = {American Chemical Society (ACS)},

abstract = {Advances in machine learned interatomic potentials (MLIPs), such as those using neural networks, have resulted in short-range models that can infer interaction energies with near ab initio accuracy and orders of magnitude reduced computational cost. For many atom systems, including macromolecules, biomolecules, and condensed matter, model accuracy can become reliant on the description of short- and long-range physical interactions. The latter terms can be difficult to incorporate into an MLIP framework. Recent research has produced numerous models with considerations for nonlocal electrostatic and dispersion interactions, leading to a large range of applications that can be addressed using MLIPs. In light of this, we present a Perspective focused on key methodologies and models being used where the presence of nonlocal physics and chemistry are crucial for describing system properties. The strategies covered include MLIPs augmented with dispersion corrections, electrostatics calculated with charges predicted from atomic environment descriptors, the use of self-consistency and message passing iterations to propagate nonlocal system information, and charges obtained via equilibration schemes. We aim to provide a pointed discussion to support the development of machine learning-based interatomic potentials for systems where contributions from only nearsighted terms are deficient.},

keywords = {AIMNet, ANI, Machine learning potential, Review},

pubstate = {published},

tppubtype = {article}

}

2022

Fedik, Nikita; Zubatyuk, Roman; Kulichenko, Maksim; Lubbers, Nicholas; Smith, Justin S.; Nebgen, Benjamin; Messerly, Richard; Li, Ying Wai; Boldyrev, Alexander I.; Barros, Kipton; Isayev, Olexandr; Tretiak, Sergei

Extending machine learning beyond interatomic potentials for predicting molecular properties Journal Article

In: Nat Rev Chem, vol. 6, no. 9, pp. 653–672, 2022.

Tags: Machine learning potential, Review

@article{Fedik2022,

title = {Extending machine learning beyond interatomic potentials for predicting molecular properties},

author = {Nikita Fedik and Roman Zubatyuk and Maksim Kulichenko and Nicholas Lubbers and Justin S. Smith and Benjamin Nebgen and Richard Messerly and Ying Wai Li and Alexander I. Boldyrev and Kipton Barros and Olexandr Isayev and Sergei Tretiak},

doi = {10.1038/s41570-022-00416-3},

year = {2022},

date = {2022-10-14},

urldate = {2022-10-14},

journal = {Nat Rev Chem},

volume = {6},

number = {9},

pages = {653--672},

publisher = {Springer Science and Business Media LLC},

abstract = {Machine learning (ML) is becoming a method of choice for modelling complex chemical processes and materials. ML provides a surrogate model trained on a reference dataset that can be used to establish a relationship between a molecular structure and its chemical properties. This Review highlights developments in the use of ML to evaluate chemical properties such as partial atomic charges, dipole moments, spin and electron densities, and chemical bonding, as well as to obtain a reduced quantum-mechanical description. We overview several modern neural network architectures, their predictive capabilities, generality and transferability, and illustrate their applicability to various chemical properties. We emphasize that learned molecular representations resemble quantum-mechanical analogues, demonstrating the ability of the models to capture the underlying physics. We also discuss how ML models can describe non-local quantum effects. Finally, we conclude by compiling a list of available ML toolboxes, summarizing the unresolved challenges and presenting an outlook for future development. The observed trends demonstrate that this field is evolving towards physics-based models augmented by ML, which is accompanied by the development of new methods and the rapid growth of user-friendly ML frameworks for chemistry.},

keywords = {Machine learning potential, Review},

pubstate = {published},

tppubtype = {article}

}

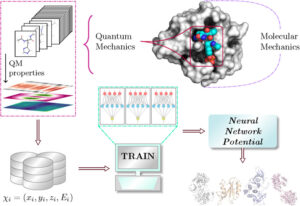

Gokcan, Hatice; Isayev, Olexandr

Learning molecular potentials with neural networks Journal Article

In: WIREs Comput Mol Sci, vol. 12, no. 2, pp. e1564, 2022.

Tags: ANI, Machine learning potential, Review

@article{Gokcan2021,

title = {Learning molecular potentials with neural networks},

author = {Hatice Gokcan and Olexandr Isayev},

doi = {10.1002/wcms.1564},

year = {2022},

date = {2022-07-14},

journal = {WIREs Comput Mol Sci},

volume = {12},

number = {2},

pages = {e1564},

publisher = {Wiley},

abstract = {The potential energy of molecular species and their conformers can be computed with a wide range of computational chemistry methods, from molecular mechanics to ab initio quantum chemistry. However, the proper choice of the computational approach based on computational cost and reliability of calculated energies is a dilemma, especially for large molecules. This dilemma is proved to be even more problematic for studies that require hundreds and thousands of calculations, such as drug discovery. On the other hand, driven by their pattern recognition capabilities, neural networks started to gain popularity in the computational chemistry community. During the last decade, many neural network potentials have been developed to predict a variety of chemical information of different systems. Neural network potentials are proved to predict chemical properties with accuracy comparable to quantum mechanical approaches but with the cost approaching molecular mechanics calculations. As a result, the development of more reliable, transferable, and extensible neural network potentials became an attractive field of study for researchers. In this review, we outlined an overview of the status of current neural network potentials and strategies to improve their accuracy. We provide recent examples of studies that prove the applicability of these potentials. We also discuss the capabilities and shortcomings of the current models and the challenges and future aspects of their development and applications. It is expected that this review would provide guidance for the development of neural network potentials and the exploitation of their applicability. This article is categorized under: Data Science > Artificial Intelligence/Machine Learning; Molecular and Statistical Mechanics > Molecular Interactions; Software > Molecular Modeling.},

keywords = {ANI, Machine learning potential, Review},

pubstate = {published},

tppubtype = {article}

}

Kulik, H J; Hammerschmidt, T; Schmidt, J; Botti, S; Marques, M A L; Boley, M; Scheffler, M; Todorović, M; Rinke, P; Oses, C; Smolyanyuk, A; Curtarolo, S; Tkatchenko, A; Bartók, A P; Manzhos, S; Ihara, M; Carrington, T; Behler, J; Isayev, O; Veit, M; Grisafi, A; Nigam, J; Ceriotti, M; Schütt, K T; Westermayr, J; Gastegger, M; Maurer, R J; Kalita, B; Burke, K; Nagai, R; Akashi, R; Sugino, O; Hermann, J; Noé, F; Pilati, S; Draxl, C; Kuban, M; Rigamonti, S; Scheidgen, M; Esters, M; Hicks, D; Toher, C; Balachandran, P V; Tamblyn, I; Whitelam, S; Bellinger, C; Ghiringhelli, L M

Roadmap on Machine learning in electronic structure Journal Article

In: Electron. Struct., vol. 4, no. 2, pp. 023004, 2022.

Tags: Machine learning potential, Materials informatics, Review

@article{Kulik2022,

title = {Roadmap on Machine learning in electronic structure},

author = {H J Kulik and T Hammerschmidt and J Schmidt and S Botti and M A L Marques and M Boley and M Scheffler and M Todorovi\'{c} and P Rinke and C Oses and A Smolyanyuk and S Curtarolo and A Tkatchenko and A P Bart\'{o}k and S Manzhos and M Ihara and T Carrington and J Behler and O Isayev and M Veit and A Grisafi and J Nigam and M Ceriotti and K T Sch\"{u}tt and J Westermayr and M Gastegger and R J Maurer and B Kalita and K Burke and R Nagai and R Akashi and O Sugino and J Hermann and F No\'{e} and S Pilati and C Draxl and M Kuban and S Rigamonti and M Scheidgen and M Esters and D Hicks and C Toher and P V Balachandran and I Tamblyn and S Whitelam and C Bellinger and L M Ghiringhelli},

doi = {10.1088/2516-1075/ac572f},

year = {2022},

date = {2022-06-01},

urldate = {2022-06-01},

journal = {Electron. Struct.},

volume = {4},

number = {2},

pages = {023004},

publisher = {IOP Publishing},

abstract = {In recent years, we have been witnessing a paradigm shift in computational materials science. In fact, traditional methods, mostly developed in the second half of the XXth century, are being complemented, extended, and sometimes even completely replaced by faster, simpler, and often more accurate approaches. The new approaches, that we collectively label by machine learning, have their origins in the fields of informatics and artificial intelligence, but are making rapid inroads in all other branches of science. With this in mind, this Roadmap article, consisting of multiple contributions from experts across the field, discusses the use of machine learning in materials science, and share perspectives on current and future challenges in problems as diverse as the prediction of materials properties, the construction of force-fields, the development of exchange correlation functionals for density-functional theory, the solution of the many-body problem, and more. In spite of the already numerous and exciting success stories, we are just at the beginning of a long path that will reshape materials science for the many challenges of the XXIth century.},

keywords = {Machine learning potential, Materials informatics, Review},

pubstate = {published},

tppubtype = {article}

}

Zheng, Peikun; Yang, Wudi; Wu, Wei; Isayev, Olexandr; Dral, Pavlo O.

Toward Chemical Accuracy in Predicting Enthalpies of Formation with General-Purpose Data-Driven Methods Journal Article

In: J. Phys. Chem. Lett., vol. 13, no. 15, pp. 3479–3491, 2022.

Tags: ANI, Machine learning potential

@article{Zheng2022,

title = {Toward Chemical Accuracy in Predicting Enthalpies of Formation with General-Purpose Data-Driven Methods},

author = {Peikun Zheng and Wudi Yang and Wei Wu and Olexandr Isayev and Pavlo O. Dral},

doi = {10.1021/acs.jpclett.2c00734},

year = {2022},

date = {2022-04-21},

urldate = {2022-04-21},

journal = {J. Phys. Chem. Lett.},

volume = {13},

number = {15},

pages = {3479--3491},

publisher = {American Chemical Society (ACS)},

abstract = {Enthalpies of formation and reaction are important thermodynamic properties that have a crucial impact on the outcome of chemical transformations. Here we implement the calculation of enthalpies of formation with a general-purpose ANI-1ccx neural network atomistic potential. We demonstrate on a wide range of benchmark sets that both ANI-1ccx and our other general-purpose data-driven method AIQM1 approach the coveted chemical accuracy of 1 kcal/mol with the speed of semiempirical quantum mechanical methods (AIQM1) or faster (ANI-1ccx). It is remarkably achieved without specifically training the machine learning parts of ANI-1ccx or AIQM1 on formation enthalpies. Importantly, we show that these data-driven methods provide statistical means for uncertainty quantification of their predictions, which we use to detect and eliminate outliers and revise reference experimental data. Uncertainty quantification may also help in the systematic improvement of such data-driven methods.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

2021

Zheng, Peikun; Zubatyuk, Roman; Wu, Wei; Isayev, Olexandr; Dral, Pavlo O.

Artificial intelligence-enhanced quantum chemical method with broad applicability Journal Article

In: Nat Commun, vol. 12, pp. 7022, 2021, ISSN: 2041-1723.

Tags: ANI, Machine learning potential

@article{Zheng2021,

title = {Artificial intelligence-enhanced quantum chemical method with broad applicability},

author = {Peikun Zheng and Roman Zubatyuk and Wei Wu and Olexandr Isayev and Pavlo O. Dral},

doi = {10.1038/s41467-021-27340-2},

issn = {2041-1723},

year = {2021},

date = {2021-12-15},

urldate = {2021-12-15},

journal = {Nat Commun},

volume = {12},

pages = {7022},

publisher = {Springer Science and Business Media LLC},

abstract = {High-level quantum mechanical (QM) calculations are indispensable for accurate explanation of natural phenomena on the atomistic level. Their staggering computational cost, however, poses great limitations, which luckily can be lifted to a great extent by exploiting advances in artificial intelligence (AI). Here we introduce the general-purpose, highly transferable artificial intelligence\textendashquantum mechanical method 1 (AIQM1). It approaches the accuracy of the gold-standard coupled cluster QM method with high computational speed of the approximate low-level semiempirical QM methods for the neutral, closed-shell species in the ground state. AIQM1 can provide accurate ground-state energies for diverse organic compounds as well as geometries for even challenging systems such as large conjugated compounds (fullerene C$_{60}$) close to experiment. This opens an opportunity to investigate chemical compounds with previously unattainable speed and accuracy as we demonstrate by determining geometries of polyyne molecules\textemdashthe task difficult for both experiment and theory. Noteworthy, our method’s accuracy is also good for ions and excited-state properties, although the neural network part of AIQM1 was never fitted to these properties.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Zubatyuk, Roman; Smith, Justin S.; Nebgen, Benjamin T.; Tretiak, Sergei; Isayev, Olexandr

Teaching a neural network to attach and detach electrons from molecules Journal Article

In: Nat Commun, vol. 12, no. 1, 2021, ISSN: 2041-1723.

Tags: AIMNet, Machine learning potential

@article{Zubatyuk2021,

title = {Teaching a neural network to attach and detach electrons from molecules},

author = {Roman Zubatyuk and Justin S. Smith and Benjamin T. Nebgen and Sergei Tretiak and Olexandr Isayev},

doi = {10.1038/s41467-021-24904-0},

issn = {2041-1723},

year = {2021},

date = {2021-08-11},

journal = {Nat Commun},

volume = {12},

number = {1},

publisher = {Springer Science and Business Media LLC},

abstract = {Interatomic potentials derived with Machine Learning algorithms such as Deep-Neural Networks (DNNs), achieve the accuracy of high-fidelity quantum mechanical (QM) methods in areas traditionally dominated by empirical force fields and allow performing massive simulations. Most DNN potentials were parametrized for neutral molecules or closed-shell ions due to architectural limitations. In this work, we propose an improved machine learning framework for simulating open-shell anions and cations. We introduce the AIMNet-NSE (Neural Spin Equilibration) architecture, which can predict molecular energies for an arbitrary combination of molecular charge and spin multiplicity with errors of about 2\textendash3 kcal/mol and spin-charges with errors of ~0.01e for small and medium-sized organic molecules, compared to the reference QM simulations. The AIMNet-NSE model allows to fully bypass QM calculations and derive the ionization potential, electron affinity, and conceptual Density Functional Theory quantities like electronegativity, hardness, and condensed Fukui functions. We show that these descriptors, along with learned atomic representations, could be used to model chemical reactivity through an example of regioselectivity in electrophilic aromatic substitution reactions.},

keywords = {AIMNet, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Zubatiuk, Tetiana; Nebgen, Benjamin; Lubbers, Nicholas; Smith, Justin S.; Zubatyuk, Roman; Zhou, Guoqing; Koh, Christopher; Barros, Kipton; Isayev, Olexandr; Tretiak, Sergei

Machine learned Hückel theory: Interfacing physics and deep neural networks Journal Article

In: J. Chem. Phys., vol. 154, no. 24, 2021, ISSN: 1089-7690.

Tags: Machine learning potential

@article{Zubatiuk2021b,

title = {Machine learned H\"{u}ckel theory: Interfacing physics and deep neural networks},

author = {Tetiana Zubatiuk and Benjamin Nebgen and Nicholas Lubbers and Justin S. Smith and Roman Zubatyuk and Guoqing Zhou and Christopher Koh and Kipton Barros and Olexandr Isayev and Sergei Tretiak},

doi = {10.1063/5.0052857},

issn = {1089-7690},

year = {2021},

date = {2021-06-28},

urldate = {2021-06-28},

journal = {J. Chem. Phys.},

volume = {154},

number = {24},

publisher = {AIP Publishing},

abstract = {The H\"{u}ckel Hamiltonian is an incredibly simple tight-binding model known for its ability to capture qualitative physics phenomena arising from electron interactions in molecules and materials. Part of its simplicity arises from using only two types of empirically fit physics-motivated parameters: the first describes the orbital energies on each atom and the second describes electronic interactions and bonding between atoms. By replacing these empirical parameters with machine-learned dynamic values, we vastly increase the accuracy of the extended H\"{u}ckel model. The dynamic values are generated with a deep neural network, which is trained to reproduce orbital energies and densities derived from density functional theory. The resulting model retains interpretability, while the deep neural network parameterization is smooth and accurate and reproduces insightful features of the original empirical parameterization. Overall, this work shows the promise of utilizing machine learning to formulate simple, accurate, and dynamically parameterized physics models.},

keywords = {Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Artrith, Nongnuch; Butler, Keith T.; Coudert, François-Xavier; Han, Seungwu; Isayev, Olexandr; Jain, Anubhav; Walsh, Aron

Best practices in machine learning for chemistry Journal Article

In: Nat. Chem., vol. 13, no. 6, pp. 505–508, 2021, ISSN: 1755-4349.

Tags: Machine learning potential, Review

@article{Artrith2021,

title = {Best practices in machine learning for chemistry},

author = {Nongnuch Artrith and Keith T. Butler and Fran\c{c}ois-Xavier Coudert and Seungwu Han and Olexandr Isayev and Anubhav Jain and Aron Walsh},

doi = {10.1038/s41557-021-00716-z},

issn = {1755-4349},

year = {2021},

date = {2021-06-15},

urldate = {2021-06-15},

journal = {Nat. Chem.},

volume = {13},

number = {6},

pages = {505--508},

publisher = {Springer Science and Business Media LLC},

abstract = {Statistical tools based on machine learning are becoming integrated into chemistry research workflows. We discuss the elements necessary to train reliable, repeatable and reproducible models, and recommend a set of guidelines for machine learning reports.},

keywords = {Machine learning potential, Review},

pubstate = {published},

tppubtype = {article}

}

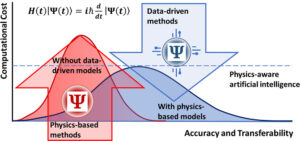

Zubatiuk, Tetiana; Isayev, Olexandr

Development of Multimodal Machine Learning Potentials: Toward a Physics-Aware Artificial Intelligence Journal Article

In: Acc. Chem. Res., vol. 54, no. 7, pp. 1575–1585, 2021, ISSN: 1520-4898.

@article{Zubatiuk2021,

title = {Development of Multimodal Machine Learning Potentials: Toward a Physics-Aware Artificial Intelligence},

author = {Tetiana Zubatiuk and Olexandr Isayev},

doi = {10.1021/acs.accounts.0c00868},

issn = {1520-4898},

year = {2021},

date = {2021-04-06},

urldate = {2021-04-06},

journal = {Acc. Chem. Res.},

volume = {54},

number = {7},

pages = {1575--1585},

publisher = {American Chemical Society (ACS)},

keywords = {AIMNet, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

2020

Gao, Xiang; Ramezanghorbani, Farhad; Isayev, Olexandr; Smith, Justin S.; Roitberg, Adrian E.

TorchANI: A Free and Open Source PyTorch-Based Deep Learning Implementation of the ANI Neural Network Potentials Journal Article

In: J. Chem. Inf. Model., vol. 60, no. 7, pp. 3408–3415, 2020.

@article{Gao2020,

title = {TorchANI: A Free and Open Source PyTorch-Based Deep Learning Implementation of the ANI Neural Network Potentials},

author = {Xiang Gao and Farhad Ramezanghorbani and Olexandr Isayev and Justin S. Smith and Adrian E. Roitberg},

doi = {10.1021/acs.jcim.0c00451},

year = {2020},

date = {2020-07-27},

urldate = {2020-07-27},

journal = {J. Chem. Inf. Model.},

volume = {60},

number = {7},

pages = {3408--3415},

publisher = {American Chemical Society (ACS)},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Devereux, Christian; Smith, Justin S.; Huddleston, Kate K.; Barros, Kipton; Zubatyuk, Roman; Isayev, Olexandr; Roitberg, Adrian E.

Extending the Applicability of the ANI Deep Learning Molecular Potential to Sulfur and Halogens Journal Article

In: J. Chem. Theory Comput., vol. 16, no. 7, pp. 4192–4202, 2020, ISSN: 1549-9626.

@article{Devereux2020,

title = {Extending the Applicability of the ANI Deep Learning Molecular Potential to Sulfur and Halogens},

author = {Christian Devereux and Justin S. Smith and Kate K. Huddleston and Kipton Barros and Roman Zubatyuk and Olexandr Isayev and Adrian E. Roitberg},

doi = {10.1021/acs.jctc.0c00121},

issn = {1549-9626},

year = {2020},

date = {2020-07-14},

urldate = {2020-07-14},

journal = {J. Chem. Theory Comput.},

volume = {16},

number = {7},

pages = {4192--4202},

publisher = {American Chemical Society (ACS)},

abstract = {Machine learning (ML) methods have become powerful, predictive tools in a wide range of applications, such as facial recognition and autonomous vehicles. In the sciences, computational chemists and physicists have been using ML for the prediction of physical phenomena, such as atomistic potential energy surfaces and reaction pathways. Transferable ML potentials, such as ANI-1x, have been developed with the goal of accurately simulating organic molecules containing the chemical elements H, C, N, and O. Here, we provide an extension of the ANI-1x model. The new model, dubbed ANI-2x, is trained to three additional chemical elements: S, F, and Cl. Additionally, ANI-2x underwent torsional refinement training to better predict molecular torsion profiles. These new features open a wide range of new applications within organic chemistry and drug development. These seven elements (H, C, N, O, F, Cl, and S) make up ∼90% of drug-like molecules. To show that these additions do not sacrifice accuracy, we have tested this model across a range of organic molecules and applications, including the COMP6 benchmark, dihedral rotations, conformer scoring, and nonbonded interactions. ANI-2x is shown to accurately predict molecular energies compared to density functional theory with a ∼10⁶ factor speedup and a negligible slowdown compared to ANI-1x and shows subchemical accuracy across most of the COMP6 benchmark. The resulting model is a valuable tool for drug development which can potentially replace both quantum calculations and classical force fields for a myriad of applications.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

Smith, Justin S.; Zubatyuk, Roman; Nebgen, Benjamin; Lubbers, Nicholas; Barros, Kipton; Roitberg, Adrian E.; Isayev, Olexandr; Tretiak, Sergei

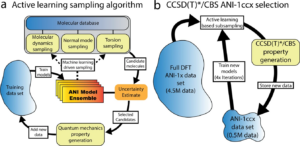

The ANI-1ccx and ANI-1x data sets, coupled-cluster and density functional theory properties for molecules Journal Article

In: Sci Data, vol. 7, no. 1, 2020, ISSN: 2052-4463.

@article{Smith2020,

title = {The ANI-1ccx and ANI-1x data sets, coupled-cluster and density functional theory properties for molecules},

author = {Justin S. Smith and Roman Zubatyuk and Benjamin Nebgen and Nicholas Lubbers and Kipton Barros and Adrian E. Roitberg and Olexandr Isayev and Sergei Tretiak},

doi = {10.1038/s41597-020-0473-z},

issn = {2052-4463},

year = {2020},

date = {2020-01-01},

urldate = {2020-01-01},

journal = {Sci Data},

volume = {7},

number = {1},

publisher = {Springer Science and Business Media LLC},

abstract = {Maximum diversification of data is a central theme in building generalized and accurate machine learning (ML) models. In chemistry, ML has been used to develop models for predicting molecular properties, for example quantum mechanics (QM) calculated potential energy surfaces and atomic charge models. The ANI-1x and ANI-1ccx ML-based general-purpose potentials for organic molecules were developed through active learning; an automated data diversification process. Here, we describe the ANI-1x and ANI-1ccx data sets. To demonstrate data diversity, we visualize it with a dimensionality reduction scheme, and contrast against existing data sets. The ANI-1x data set contains multiple QM properties from 5 M density functional theory calculations, while the ANI-1ccx data set contains 500 k data points obtained with an accurate CCSD(T)/CBS extrapolation. Approximately 14 million CPU core-hours were expended to generate this data. Multiple QM calculated properties for the chemical elements C, H, N, and O are provided: energies, atomic forces, multipole moments, atomic charges, etc. We provide this data to the community to aid research and development of ML models for chemistry.},

keywords = {ANI, dataset, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}

2017

Smith, Justin S.; Isayev, Olexandr; Roitberg, Adrian E.

ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost Journal Article

In: Chemical Science, vol. 8, pp. 3192–3203, 2017.

@article{Smith2017,

title = {ANI-1: an extensible neural network potential with DFT accuracy at force field computational cost},

author = {Justin S. Smith and Olexandr Isayev and Adrian E. Roitberg},

url = {https://olexandrisayev.com/wp-content/uploads/2024/02/c6sc05720a.pdf},

doi = {10.1039/C6SC05720A},

year = {2017},

date = {2017-02-08},

urldate = {2017-02-08},

journal = {Chemical Science},

volume = {8},

pages = {3192--3203},

abstract = {Deep learning is revolutionizing many areas of science and technology, especially image, text, and speech recognition. In this paper, we demonstrate how a deep neural network (NN) trained on quantum mechanical (QM) DFT calculations can learn an accurate and transferable potential for organic molecules. We introduce ANAKIN-ME (Accurate NeurAl networK engINe for Molecular Energies) or ANI for short. ANI is a new method designed with the intent of developing transferable neural network potentials that utilize a highly-modified version of the Behler and Parrinello symmetry functions to build single-atom atomic environment vectors (AEV) as a molecular representation. AEVs provide the ability to train neural networks to data that spans both configurational and conformational space, a feat not previously accomplished on this scale. We utilized ANI to build a potential called ANI-1, which was trained on a subset of the GDB databases with up to 8 heavy atoms in order to predict total energies for organic molecules containing four atom types: H, C, N, and O. To obtain an accelerated but physically relevant sampling of molecular potential surfaces, we also proposed a Normal Mode Sampling (NMS) method for generating molecular conformations. Through a series of case studies, we show that ANI-1 is chemically accurate compared to reference DFT calculations on much larger molecular systems (up to 54 atoms) than those included in the training data set.},

keywords = {ANI, Machine learning potential},

pubstate = {published},

tppubtype = {article}

}