Machine Learning for Scalable Chemical Research

Experiment Automation

Building ML-enabled workflows for scalable, reliable, and reproducible chemical experimentation in cloud laboratories.

My research in experiment automation focuses on building machine-learning-enabled workflows that make chemical experimentation more scalable, reliable, and reproducible. Modern cloud laboratory and robotic platforms can execute large numbers of experiments remotely around the clock, but they introduce a new bottleneck: ensuring data quality and interpretability without constant expert oversight. Automation shifts failure modes from familiar human errors toward more subtle issues such as instrument drift, contamination, and analytical artifacts that are difficult to detect at scale. Addressing these challenges requires tight integration between automated execution, real-time quality control, adaptive experimental design, and robust data infrastructure.

Cloud Laboratories and Automated Quality Control

A central theme of my recent work is improving the reliability of automated experiments conducted in cloud laboratory environments. Carnegie Mellon University established the world’s first academic cloud laboratory in 2021 through a partnership with Emerald Cloud Lab, providing on-demand access to over 130 different instrument types remotely controlled through unified software interfaces. This infrastructure lets experiments run continuously: robotic instruments execute protocols exactly as specified in researchers’ online commands, and all generated data are automatically organized, linked to the commands that produced them, and stored for reproducibility.

In practice, however, autonomous experimentation introduces new failure modes that require automated detection. I developed a machine learning framework for automated anomaly detection in high-performance liquid chromatography (HPLC) experiments executed in cloud laboratory settings, published in Digital Discovery. The system addresses common failure signatures including air bubble contamination and instrument drift that can corrupt experimental results if not detected early.

The framework provides expert-level quality control without human oversight: it flags affected HPLC runs in real time, enabling intervention before errors propagate into downstream analysis pipelines. This capability supports early detection of instrument failures before they corrupt entire experimental batches; automated identification of contamination patterns that would traditionally require expert manual review; continuous quality monitoring across large-scale experimental campaigns running thousands of samples; and substantially shorter turnaround for identifying and addressing systematic issues that could otherwise invalidate weeks of experimental work.
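The published framework relies on trained machine learning models. As a much simpler, hypothetical stand-in, the sketch below shows the kind of per-run check such a system automates, assuming that an air bubble shows up as a transient disturbance in the pump pressure trace and that a known-good reference trace is available on the same time grid. The function name, the robust z-score approach, and the thresholds are illustrative assumptions, not the method from the Digital Discovery paper.

```python
import statistics
from typing import List, Sequence, Tuple


def flag_pressure_anomaly(
    trace: Sequence[float],
    reference: Sequence[float],
    z_threshold: float = 6.0,
    min_points: int = 3,
) -> Tuple[bool, List[int]]:
    """Hypothetical QC check: flag a run whose pressure trace deviates sharply
    from a known-good reference trace sampled on the same time grid.

    Residuals are scored with a robust z-score (median / MAD), so a handful of
    extreme points cannot inflate the noise scale the way they would with a
    mean / standard-deviation score.
    """
    if len(trace) != len(reference):
        raise ValueError("trace and reference must be sampled on the same time grid")
    residuals = [t - r for t, r in zip(trace, reference)]
    center = statistics.median(residuals)
    mad = statistics.median(abs(x - center) for x in residuals)
    # 1.4826 * MAD estimates the standard deviation for Gaussian noise;
    # fall back to a tiny scale if the residuals are all identical.
    scale = 1.4826 * mad or 1e-9
    outliers = [i for i, x in enumerate(residuals) if abs(x - center) / scale > z_threshold]
    # Require several outlying points so a single noisy sample does not flag the run.
    return len(outliers) >= min_points, outliers
```

In use, the flag would be computed as each run finishes, and flagged runs would be held back from downstream analysis; the returned indices point to where in the run the disturbance occurred.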

Automated Synthesis and ML-Guided Screening

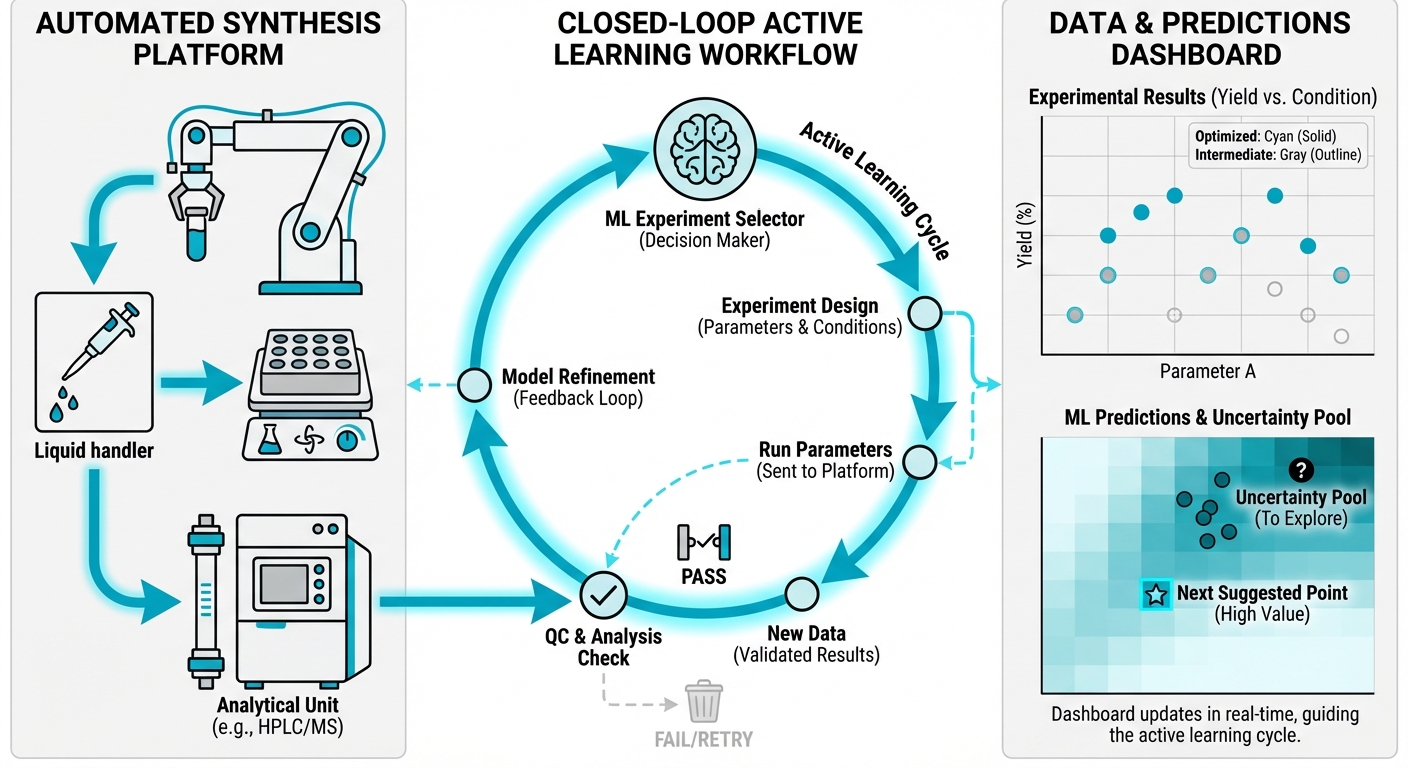

Experiment automation becomes particularly impactful when paired with machine-learning-guided selection strategies that determine which experiments to run next. My collaboration on 19F MRI contrast agent discovery, published in JACS, demonstrates how automated polymer synthesis integrated with ML-driven screening can accelerate materials discovery beyond what traditional approaches achieve.

Modern polymer science faces what might be called the curse of multidimensionality: the chemical space of monomer combinations, compositions, and processing conditions overwhelms traditional synthesis and characterization capabilities, limiting systematic investigation of structure-property relationships. Our approach addresses this challenge with three elements. A software-controlled continuous polymer synthesis platform enables rapid, iterative experimental-computational cycles. AutoML-driven discovery uses machine learning, rather than human expert knowledge, to explore the chemistry autonomously through model-guided trial and error. Efficient chemical space exploration narrowed 50,000 potential polymer compositions to 397 experimentally synthesized candidates over eight refinement cycles, exploring less than 0.9% of the overall compositional space while identifying more than 10 copolymer compositions that outperformed state-of-the-art contrast agents by as much as 50%.
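As a quick arithmetic check on the screening figures above (the per-cycle figure is only an average; the source does not state how syntheses were split across cycles):

$$
\frac{397}{50\,000} \approx 0.0079 = 0.79\,\% < 0.9\,\%,
\qquad
\frac{50\,000}{397} \approx 126,
\qquad
\frac{397}{8} \approx 50 .
$$

That is, roughly one composition in 126 was actually synthesized, at an average of about 50 syntheses per refinement cycle.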

This work exemplifies a broader principle that guides my research: the highest leverage often comes from tight coupling between execution and learning, where automated experimentation is designed to generate the right data for downstream modeling, and models are designed to guide which experiments to run next. In this system, decisions about what to synthesize shift from human experts to AI models, which can evaluate options more systematically and are less subject to the cognitive biases that affect human experimentalists.

Workflow Software and APIs

Automation requires more than robotics and instrumentation; it also demands software infrastructure that supports workflow composition, provenance tracking, and integration with machine learning models. My work contributes to this infrastructure layer through tools that make ML-enhanced computational chemistry workflows easier to build and deploy.

TorchANI, published in the Journal of Chemical Information and Modeling and cited more than 250 times, provides a PyTorch-based implementation of ANI neural network potentials designed for easy integration into automated workflows. The framework emphasizes a lightweight, cross-platform design for rapid prototyping; automatic differentiation, which enables training on forces without additional code; and integration with ASE (Atomic Simulation Environment) and RDKit for molecular workflow automation.
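TorchANI obtains forces through PyTorch's reverse-mode autograd. As a library-free illustration of the same principle (forces as the negative gradient of a potential energy, with no hand-written derivative), the sketch below uses forward-mode dual numbers and a Lennard-Jones pair potential as a stand-in for an ANI model. `Dual`, `lj_energy`, and `force` are hypothetical names for this illustration and are not TorchANI APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Forward-mode dual number val + eps * d, where eps**2 == 0."""
    val: float
    d: float = 0.0

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.d + o.d)

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual.lift(other)
        return Dual(self.val - o.val, self.d - o.d)

    def __mul__(self, other):
        o = Dual.lift(other)
        # Product rule.
        return Dual(self.val * o.val, self.val * o.d + self.d * o.val)

    __rmul__ = __mul__

    def __truediv__(self, other):
        o = Dual.lift(other)
        # Quotient rule.
        return Dual(self.val / o.val, (self.d * o.val - self.val * o.d) / o.val ** 2)

    def __rtruediv__(self, other):
        return Dual.lift(other) / self

    def __pow__(self, n: int):
        # Power rule for integer exponents.
        return Dual(self.val ** n, n * self.val ** (n - 1) * self.d)


def lj_energy(r, epsilon=1.0, sigma=1.0):
    """Lennard-Jones pair energy; stands in for a learned potential."""
    sr6 = (sigma / r) ** 6
    return 4 * epsilon * (sr6 * sr6 - sr6)


def force(energy_fn, r: float) -> float:
    """F = -dE/dr, obtained by seeding dr/dr = 1 and reading off the derivative."""
    return -energy_fn(Dual(r, 1.0)).d
```

The energy function is written once, as ordinary arithmetic, and the derivative comes for free; this is the property that lets an autodiff framework train a potential on forces without extra force-specific code.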

AFLOW-ML provides a RESTful API for machine learning predictions that can be called from automated pipelines for materials and chemistry applications. It offers open API access to continuously updated ML algorithms, cloud-based applications that streamline adoption of machine learning methods, and predictions of electronic, thermal, and mechanical properties from models trained on extensive computational databases.

Tools like these help provide standardized interfaces between experimental hardware and computational models, reproducible workflow definitions that can be shared and version-controlled, automatic provenance tracking for experimental and computational steps, and seamless integration of ML predictions into the decision-making loops that orchestrate automated experimentation.
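A minimal sketch of what automatic provenance tracking can look like, assuming each workflow step's parameters, inputs, and outputs are JSON-serializable. The `record_step` and `digest` helpers and the record fields are hypothetical and are not part of AFLOW-ML or TorchANI.

```python
import hashlib
import json
from datetime import datetime, timezone


def digest(obj) -> str:
    """Stable SHA-256 of a JSON-serializable object (keys sorted, compact separators)."""
    blob = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(blob).hexdigest()


def record_step(log, name, params, inputs, outputs, parents=()):
    """Append a provenance entry linking a step to its inputs, outputs, and parent steps.

    The entry id is a hash of everything except the timestamp, so re-running the
    same step on the same data reproduces the same id.
    """
    entry = {
        "step": name,
        "params": params,
        "input_digest": digest(inputs),
        "output_digest": digest(outputs),
        "parents": list(parents),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    entry["id"] = digest({k: v for k, v in entry.items() if k != "timestamp"})
    log.append(entry)
    return entry["id"]
```

For example, a prediction step and the selection step that consumed its output can be chained by passing the first step's id in `parents`, so any result can later be traced back through the steps that produced it.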

Active Learning and Closed-Loop Experimentation

A central goal of experiment automation is not merely running more experiments, but running more informative experiments. My research emphasizes active learning and adaptive sampling strategies that prioritize experiments expected to reduce model uncertainty, reveal new physical regimes, or improve coverage of relevant chemical space.

The foundational active learning methodology, published in the Journal of Chemical Physics and cited more than 680 times, introduced a fully automated approach to dataset generation using Query by Committee (QBC). The method uses disagreement within an ensemble of ML potentials to infer prediction reliability, automatically sampling the regions of chemical space where the models fail to predict outcomes accurately. This approach mitigates human bias in deciding what training data to collect and makes more efficient use of expensive computational or experimental resources.
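A minimal Query by Committee sketch: each committee member predicts every candidate, and candidates where the members disagree most are queued for labeling. The "models" here are arbitrary callables, and the plain standard deviation, the threshold, and the cap on queries are illustrative assumptions; the published method works with ensembles of ANI potentials, and its exact disagreement metric (including any normalization by system size) may differ.

```python
import statistics
from typing import Callable, List, Sequence


def committee_disagreement(predictions: Sequence[float]) -> float:
    """Population standard deviation of the committee's predictions for one candidate."""
    return statistics.pstdev(predictions)


def select_queries(
    candidates: Sequence[float],
    committee: Sequence[Callable[[float], float]],
    threshold: float,
    max_queries: int,
) -> List[float]:
    """Return candidates whose committee disagreement exceeds `threshold`,
    most uncertain first, capped at `max_queries`."""
    scored = []
    for c in candidates:
        spread = committee_disagreement([model(c) for model in committee])
        if spread > threshold:
            scored.append((spread, c))
    scored.sort(key=lambda t: t[0], reverse=True)
    return [c for _, c in scored[:max_queries]]
```

The selected candidates would then be labeled (by a reference calculation or an experiment), added to the training set, and the committee retrained, so the sampled data concentrates where the models are least reliable.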

In automated settings, active learning creates a practical route to closed-loop experimentation: models propose the next set of experiments, laboratory systems execute them, and the results update both the predictive and the decision models. In an application to lead optimization in drug discovery, active-learning-guided workflows achieved 20-fold efficiency gains over brute-force screening, identifying compounds with improved binding from thousands of candidates using only hundreds of targeted simulations.
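A toy version of the propose, execute, update cycle described above, assuming a finite pool of candidates and a `run_experiment` callable standing in for the laboratory or simulation step. The surrogate (a bootstrap committee of 1-nearest-neighbour predictors) and the upper-confidence-bound acquisition are swapped-in illustrative choices, not the models used in the drug-discovery or polymer work.

```python
import random
import statistics
from typing import Callable, Dict, List


def closed_loop(
    pool: List[int],
    run_experiment: Callable[[int], float],
    n_rounds: int,
    n_init: int = 3,
    n_models: int = 5,
    beta: float = 1.0,
    seed: int = 0,
) -> Dict[int, float]:
    """Toy closed loop: fit a bootstrap committee, pick the untested candidate with
    the highest upper confidence bound (mean + beta * spread), run it, repeat."""
    rng = random.Random(seed)
    # Seed the loop with a few randomly chosen experiments.
    results = {x: run_experiment(x) for x in rng.sample(pool, n_init)}
    for _ in range(n_rounds):
        untested = [x for x in pool if x not in results]
        if not untested:
            break  # every candidate has been measured
        labeled = list(results.items())
        # Each committee member is a bootstrap resample of the labeled data,
        # used as a 1-nearest-neighbour predictor.
        committee = [[rng.choice(labeled) for _ in labeled] for _ in range(n_models)]

        def ucb(x):
            preds = [min(m, key=lambda pair: abs(pair[0] - x))[1] for m in committee]
            # Predicted value plus an exploration bonus from committee disagreement.
            return statistics.mean(preds) + beta * statistics.pstdev(preds)

        best_next = max(untested, key=ucb)
        results[best_next] = run_experiment(best_next)  # the "laboratory" step
    return results
```

Here `beta` sets how strongly the loop favors uncertain candidates over those predicted to score well; in a real campaign `run_experiment` would submit a protocol and return a measured, quality-checked result, and each round would typically propose a batch rather than a single experiment.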

I view this integration as a key step toward autonomous discovery systems that combine four components: automated experiment execution through robotics and cloud laboratories; automated measurement and quality control through analytical anomaly detection; model-guided experiment selection through active learning and Bayesian experimental design; and automated data infrastructure supporting provenance tracking, versioning, and data reuse across projects.

Outlook and Future Directions

Looking forward, my research aims to strengthen the connection between automation and chemical insight. Near-term directions include four areas. Multi-modal quality control would extend automated anomaly detection beyond single instruments to integrated analytical stacks combining chromatography, spectroscopy, and mass spectrometry. Distribution-shift handling would improve failure detection when experimental conditions drift away from the training data distribution, a common challenge in long-running automated campaigns. Uncertainty-aware policies would explicitly trade off exploration of novel chemical space against exploitation of known productive regions. Finally, platform capabilities would position experiment automation as core infrastructure for chemistry and drug discovery rather than specialized equipment for individual projects.
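One classical baseline for detecting slow drift in a long-running campaign is the tabular CUSUM chart from statistical process control, sketched below on a per-run QC metric. Using the retention time of a reference standard as that metric, and the `target`, `slack`, and `threshold` values, are hypothetical choices that would need calibration on real data; this is not a method from the work described above.

```python
from typing import Optional, Sequence


def cusum_drift(
    values: Sequence[float],
    target: float,
    slack: float,
    threshold: float,
) -> Optional[int]:
    """Two-sided tabular CUSUM on a per-run QC metric.

    `hi` accumulates evidence of an upward shift and `lo` of a downward shift;
    deviations smaller than `slack` are treated as noise and drain the sums.
    Returns the index of the first run at which drift is signalled, or None.
    """
    hi = lo = 0.0
    for i, x in enumerate(values):
        hi = max(0.0, hi + (x - target - slack))
        lo = max(0.0, lo + (target - x - slack))
        if hi > threshold or lo > threshold:
            return i
    return None
```

Because the sums accumulate small, persistent deviations, CUSUM can flag gradual drift that no single run would reveal on its own, which complements point-wise checks such as per-run anomaly flags.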

More broadly, I am interested in experiment automation as a platform capability, where robust, reproducible pipelines and well-curated, machine-readable datasets enable faster iteration across both computational and experimental teams. The long-term vision is chemistry research conducted through seamless human-machine collaboration, where automated systems handle routine execution and quality control while human researchers focus on hypothesis generation, interpretation, and strategic direction. By combining automation with machine learning guidance, we can accelerate discovery and improve reproducibility, addressing two of the most significant challenges facing modern chemical research.

Key Publications

Democratizing Reaction Kinetics through Machine Vision and Learning

(2025)

Machine learning anomaly detection of automated HPLC experiments in the cloud laboratory

Digital Discovery, 4, 3445–3454 (2025)

Autonomous experiments are vulnerable to unforeseen adverse events.

Design of Tough 3D Printable Elastomers with Human‐in‐the‐Loop Reinforcement Learning

Angewandte Chemie, 137 (2025)

The development of high‐performance elastomers for additive manufacturing requires overcoming complex property trade‐offs that challenge conventional material discovery pipelines.

Machine-Learning-Guided Discovery of 19F MRI Agents Enabled by Automated Copolymer Synthesis

Journal of the American Chemical Society, 143, 17677–17689 (2021)

AFLOW-ML: A RESTful API for machine-learning predictions of materials properties

Computational Materials Science, 152, 134–145 (2018)

Frequently Asked Questions

What is the CMU Cloud Laboratory and how does it advance chemical research?

Carnegie Mellon University established the world's first academic cloud laboratory in 2021 through a partnership with Emerald Cloud Lab, providing on-demand access to over 130 different instrument types remotely controlled through unified software interfaces. Experiments run continuously with robotic instrumentation executing protocols as specified by researchers' online commands, with all generated data automatically organized and stored for reproducibility.

How does machine learning improve quality control in automated experiments?

Our ML framework for automated HPLC anomaly detection provides expert-level quality control without human oversight, flagging affected runs in real time. The system addresses common failure signatures including air bubble contamination and instrument drift, enabling early detection of instrument failures before they corrupt entire experimental batches and substantially reducing turnaround time for identifying systematic issues.

What efficiency gains does active learning provide in automated experimentation?

In drug discovery lead optimization, active-learning-guided workflows can achieve 20-fold efficiency gains over brute-force screening. Our polymer synthesis work narrowed 50,000 potential compositions to 397 candidates through eight refinement cycles, exploring less than 0.9% of the overall compositional space while identifying more than 10 copolymer compositions that outperformed state-of-the-art 19F MRI contrast agents by up to 50%.

What is TorchANI and how does it enable workflow automation?

TorchANI, a PyTorch-based implementation of ANI neural network potentials cited more than 250 times, is designed for easy integration into automated workflows. It features a lightweight, cross-platform design for rapid prototyping, automatic differentiation that enables force training without additional code, and integration with ASE and RDKit for molecular workflow automation.